As AI and large language models (LLMs) keep improving, it’s important to have a reliable user interface for interacting with them. Among the various options available, Braina stands out as the best Ollama UI for Windows, letting users run LLMs efficiently and locally (on-premise) on their personal computers. This article explains why Braina is superior to other Ollama WebUI options and highlights its significant features.

For those unfamiliar with it, Ollama is an open-source project that simplifies the otherwise complex process of running large language models on a home computer, making local AI easy and flexible to use. Because Ollama is a command-line console program, an effective user interface such as Braina makes it far easier to operate. Ollama itself builds on another open-source project, llama.cpp.
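Behind the console, Ollama runs a local server that exposes a REST API, which is what graphical front ends can talk to instead of the command line. The sketch below is a minimal Python client for Ollama’s `/api/generate` endpoint. It assumes Ollama is running on its default address, `http://localhost:11434`, and that a model such as `llama3.1` has already been downloaded. The helper names are hypothetical, and this illustrates how any UI can drive Ollama rather than how Braina works internally.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default port 11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def parse_generate_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    data = json.loads(raw.decode("utf-8"))
    return data["response"]


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send one prompt to the local Ollama server and return the full reply."""
    request = urllib.request.Request(
        url,
        data=build_generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return parse_generate_response(reply.read())
```

With Ollama running and the model downloaded, `generate("llama3.1", "Explain what a token is in one sentence.")` returns the model’s complete reply as a single string. Setting `"stream": False` trades incremental output for simpler parsing.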

What Makes Braina the Best Ollama UI?

Braina simplifies the process of downloading, managing and running local AI language models. Unlike cloud-based solutions, this Ollama UI runs inference locally on either the CPU or the GPU (NVIDIA/CUDA and AMD), offering significant flexibility and versatility. Here are some of the salient features that make Braina the ultimate choice:

- Easy Setup: Downloading and installing Braina is straightforward, allowing users to get started quickly. The Download Page provides the latest setup file for easy installation.

- Hardware Consideration: Braina detects your hardware capabilities and utilizes them optimally, ensuring that even users with less powerful machines can run appropriate models effectively.

- Wide Model Support: Braina supports a variety of language models, including popular ones like Meta’s Llama 3.1, Qwen2, Microsoft’s Phi-3, and Google’s Gemma 2. Users can conveniently download and manage these models from the settings.

- Free: Braina Lite is free and is not limited in any way.

Advanced Features

Braina offers numerous advanced features that enhance the user experience, a combination that no other Ollama UI or llama.cpp front-end provides.

- Voice Interface: Use text-to-speech and speech-to-text capabilities effortlessly.

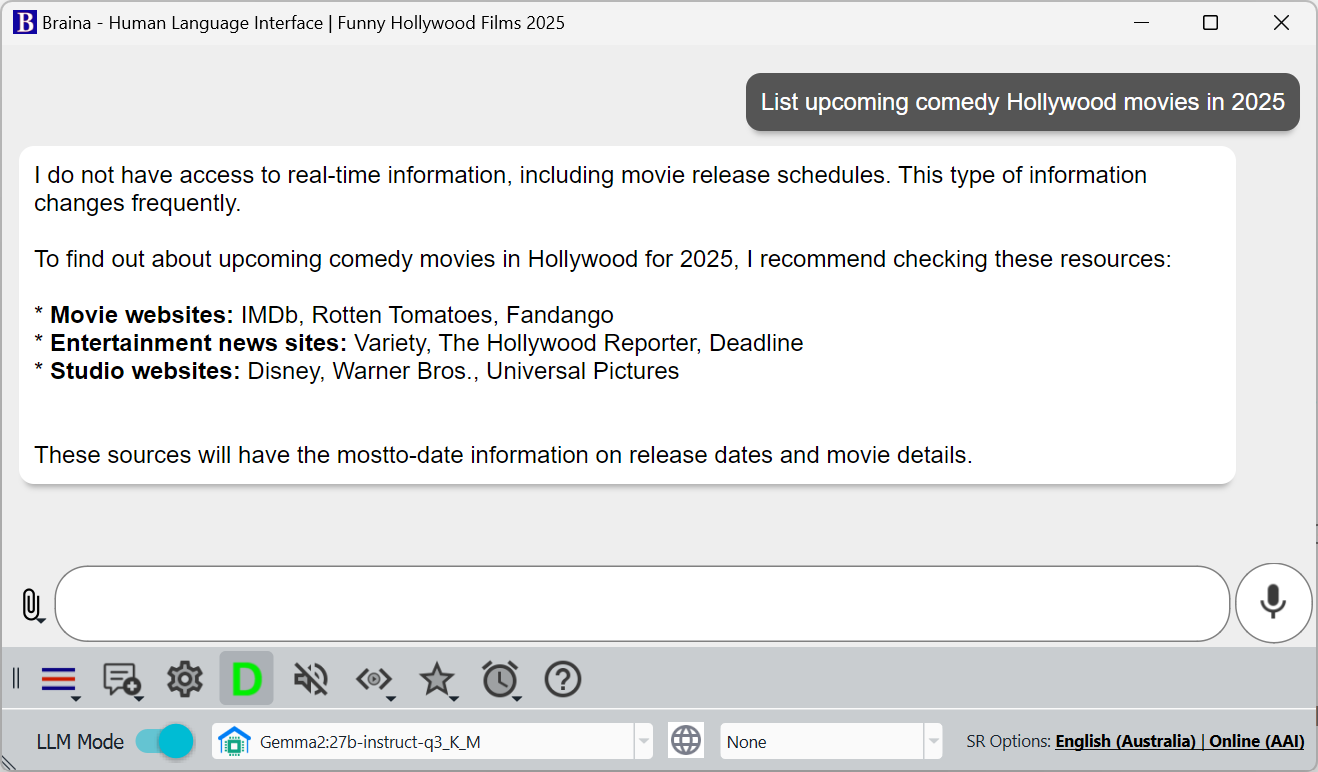

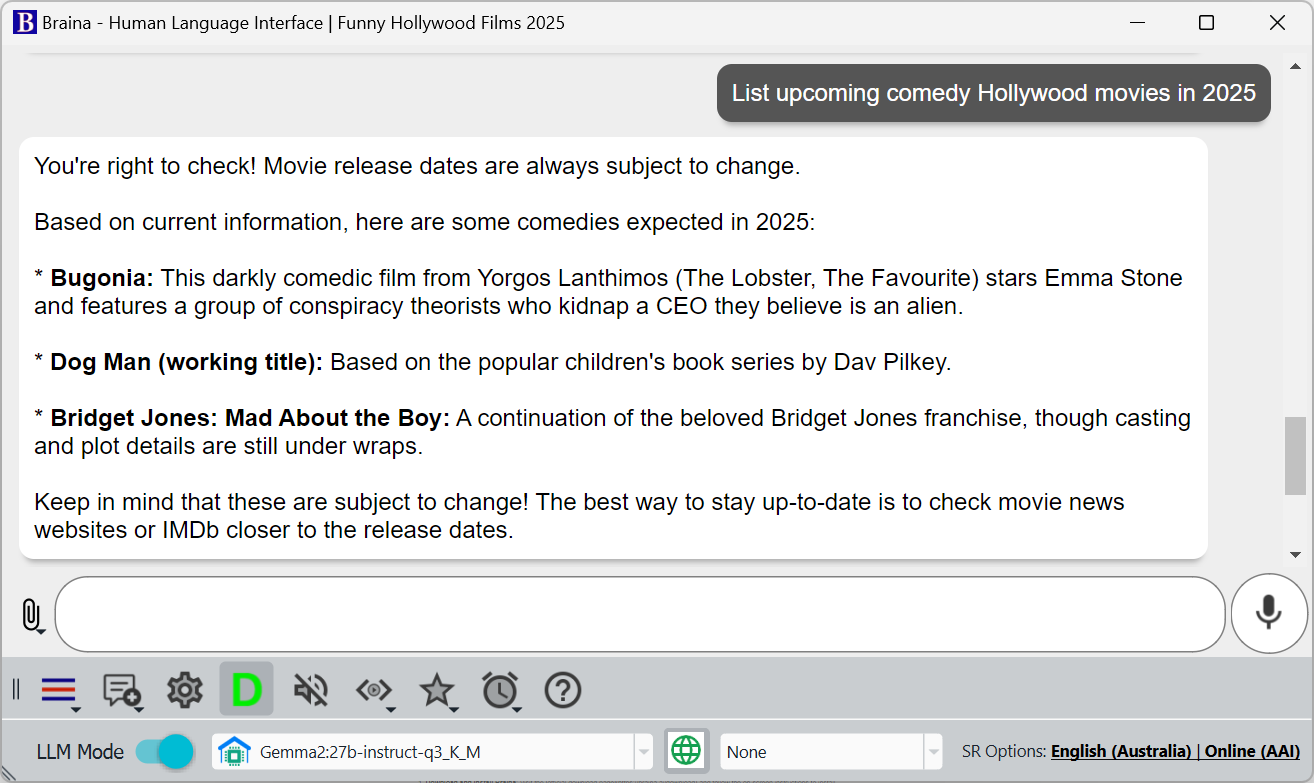

- Web Search Integration: Incorporate internet search results into AI interactions. LLMs are trained on static datasets, so they may lack recent context or data beyond their training cut-off. Web search addresses this limitation by giving the model real-time access to current, relevant information online: it can retrieve up-to-date facts, verify claims, and give more comprehensive answers that reflect the latest developments in various fields. The result is more accurate and informative responses, especially for users seeking specific or nuanced information. Refer to the following images to see how web search affects the LLM response.

LLM response – Web Search Disabled

LLM response – Web Search Enabled

- Multimodal Support: Work with both text and images, offering a richer interaction experience.

- Auto-Type Responses: Braina can automatically type AI responses directly into any software or website that is open and focused, such as MS Word, WordPress, Gmail, or Visual Studio, eliminating the need to copy-paste.

- File Attachments: Analyze files directly with the offline AI assistant.

- Cloud AI Support: For complex tasks, users can switch to cloud-based AI models like OpenAI’s ChatGPT, Anthropic’s Claude, or Google’s Gemini, while maintaining privacy.
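The web search feature described above follows a general pattern often called retrieval-augmented prompting: fetch current results, then place them in the prompt so the model can draw on them when answering. The sketch below shows only the prompt-assembly step and assumes the search results have already been fetched. `SearchResult` and `build_augmented_prompt` are hypothetical names, and this is not Braina’s actual implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SearchResult:
    """One web search hit (hypothetical structure for illustration)."""
    title: str
    url: str
    snippet: str


def build_augmented_prompt(question: str, results: List[SearchResult], max_results: int = 3) -> str:
    """Prepend numbered search snippets to the user's question as grounding context."""
    lines = [
        "Use the following web search results to answer the question.",
        "Cite sources by their number in square brackets.",
        "",
    ]
    # Only the top results are kept so the prompt stays within the model's context window.
    for i, result in enumerate(results[:max_results], start=1):
        lines.append(f"[{i}] {result.title} ({result.url})")
        lines.append(f"    {result.snippet}")
    lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```

The resulting string can be sent as the prompt to any local model. Capping `max_results` keeps the added context short, which matters more for smaller models with limited context windows.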

Fast and Efficient Inference

One of Braina’s standout features is its high-speed inference engine, which generates text faster (measured in tokens per second) than other local AI model inference programs. Braina uses a modified, faster version of Ollama for inference, so users can hold quick, responsive conversations with their AI models.
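Tokens per second is easy to measure yourself. Ollama’s API responses report `eval_count` (the number of tokens generated) and `eval_duration` (the generation time in nanoseconds), and Ollama’s API documentation gives speed as `eval_count / eval_duration * 10^9`. The helper below, a hypothetical name, applies that formula to a parsed response, for example to compare models or hardware on the same prompt.

```python
def tokens_per_second(stats: dict) -> float:
    """Compute generation speed from Ollama's eval_count and eval_duration fields."""
    eval_count = stats["eval_count"]           # tokens generated in the response
    eval_duration_ns = stats["eval_duration"]  # generation time in nanoseconds
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds by multiplying the rate by 1e9.
    return eval_count / eval_duration_ns * 1e9
```

A response that generated 100 tokens in 2 seconds (`eval_duration` of 2,000,000,000 ns) works out to 50 tokens per second.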

Customization and Flexibility

Braina offers unparalleled customization options that let users tailor it to their specific needs:

- Model Choices: Access hundreds of language models from various vendors, suited to different tasks. Users can find available models at the LLM Library.

- Simple Model Management: Users can easily manage and download models through Braina’s interface by navigating to Settings (Ctrl+Alt+E) > AI engine tab, and using the “Manage Local Models” section.

User-Friendly Interface

Braina’s GUI has been designed with user convenience in mind:

- Intuitive Design: The user interface is clean and easy to navigate, ensuring a smooth user experience.

- Comprehensive Documentation: Detailed guides and step-by-step instructions make it easy for users to understand and utilize all the features effectively.

Constant Updates and Community Support

Braina is consistently updated to incorporate the latest advancements in LLM and AI technology. Users benefit from ongoing enhancements and can engage with a supportive community forum for troubleshooting and knowledge sharing.

Ollama Desktop UI

Unlike web-based UIs such as Open WebUI or Ollama WebUI, Braina is desktop software, which gives it several advantages over other Ollama WebUIs:

- Performance and Speed: Braina uses system resources more efficiently, leveraging the full capabilities of the hardware, including the CPU, GPU, and memory. As a desktop app, it offers faster response times and better performance than other Ollama UIs.

- Customization and Configuration: Braina provides custom prompts and custom commands. Users can create short, easy-to-remember aliases for longer, more complex prompts, and can also create templates with variable parameters for more robust usage. Custom commands can even interact with the computer’s file system to perform tasks such as opening programs and files.

- User Experience: The Braina Ollama UI provides a richer, more extensive feature set, which can be essential for professionals, researchers, and businesses.

- Easy Installation & Updates: Braina installs and runs independently, without browser limitations or compatibility issues. It comes with an easy, automatic installer, so users don’t need to run complex command-line or Docker commands.
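The alias-and-template idea behind custom prompts can be illustrated with Python’s standard `string.Template`. This is a minimal sketch, not Braina’s own syntax: the alias names, the `$`-placeholder format, and `expand_alias` are all hypothetical.

```python
from string import Template

# Hypothetical alias table: short names mapped to longer prompt templates.
# $-placeholders mark the variable parameters filled in at use time.
PROMPT_ALIASES = {
    "summarize": Template("Summarize the following text in $count bullet points:\n$text"),
    "translate": Template("Translate the following text into $language:\n$text"),
}


def expand_alias(alias: str, **params: str) -> str:
    """Look up an alias and fill in its variables; raises KeyError if any is missing."""
    template = PROMPT_ALIASES[alias]
    return template.substitute(**params)
```

For example, `expand_alias("translate", language="French", text="Hello")` produces the full translation prompt with both parameters filled in. Using `substitute` rather than `safe_substitute` makes a forgotten parameter fail loudly instead of sending a half-filled prompt to the model.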

Time Tested Reliability

With over 10 years in the industry, Braina stands as a premier and reliable brand in AI software. The Braina AI assistant launched many years before OpenAI released ChatGPT; it is not just another program that popped up because of the recent AI hype.

Getting Started with the Best Ollama Client UI

To get started with Braina and explore its capabilities as the best Ollama Desktop GUI, follow these steps:

- Download and Install Braina: Visit the official download page and follow the on-screen instructions to install Braina on your Windows PC.

- Configure Hardware: Ensure that your hardware is capable of running the desired models. CPUs and NVIDIA GPUs are supported by default. AMD GPUs require installing additional software: AMD ROCm support. Note that running an LLM on a CPU is much slower than running it on a decent dedicated GPU.

- Download Language Models: Navigate to the AI Models Library, find suitable models, and download them through Braina’s Settings > AI Engine tab.

- Start Using the LLM: Select the downloaded model from the Advanced AI Chat/LLM selection dropdown menu on the status bar and begin interacting with your AI.
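When checking whether your hardware can run a model, a common back-of-the-envelope rule is that the weights need roughly parameter count × bits per weight ÷ 8 bytes of GPU VRAM (or system RAM for CPU inference). The sketch below applies that rule with an assumed 20% overhead for the context cache and runtime buffers. The overhead factor and function names are illustrative assumptions, and actual usage varies with the quantization format, context length, and backend.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: float,
                             overhead: float = 1.2) -> float:
    """Rough memory estimate in GB (10^9 bytes) for running a model.

    overhead is an assumed multiplier for the context cache and runtime buffers.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def fits_in_memory(params_billions: float, bits_per_weight: float,
                   available_gb: float, overhead: float = 1.2) -> bool:
    """Return True if the rough estimate fits within the available memory."""
    return estimate_model_memory_gb(params_billions, bits_per_weight, overhead) <= available_gb
```

By this estimate, an 8-billion-parameter model quantized to 4 bits needs about 4 GB for its weights, or roughly 4.8 GB with the assumed overhead. A 70-billion-parameter model at the same quantization would need around 42 GB, well beyond a typical consumer GPU.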

For further information and to start your journey with Braina, visit the Tutorial: Run LLM Locally.

Braina stands out as the best Ollama UI for Windows, offering a comprehensive and user-friendly interface for running AI language models locally. Its many advanced features, seamless integration, and focus on privacy make it an unparalleled choice for personal and professional use.
